Moving to GDDR4:

GDDR3 saw a dramatic speed increase toward the end of last year when NVIDIA announced its GeForce 7800 GTX 512. In the space of a few weeks, the fastest memory on a video card went from 1200MHz on NVIDIA's GeForce 7800 GTX to 1500MHz on the Radeon X1800XT, and then right up to 1700MHz for the GeForce 7800 GTX 512's reference clocks.

The poor availability of the GeForce 7800 GTX 512 was incredibly well documented, so it came as no surprise when both ATI and NVIDIA released product refreshes - the Radeon X1900 and GeForce 7900 series - with lower memory frequencies than the reference 7800 GTX 512 clocks. It was almost as if the two graphics giants had hit a wall at around 1600MHz, even though both were using 1.1ns modules. This is where GDDR4 takes over.

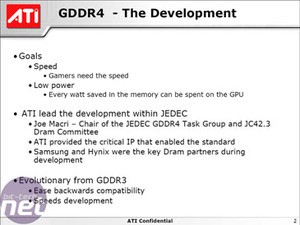

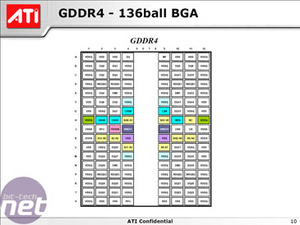

ATI played a heavy role in the development of GDDR4, working in conjunction with JEDEC, Samsung and Hynix. Gamers want to enable higher levels of anti-aliasing at higher resolutions, and that craving for memory bandwidth means more memory speed is required. The alternative, moving to a 512-bit memory interface, would create a whole host of problems because - amongst other things - the pin count on the back of the GPU would need to increase massively. That would mean either a much higher pin density or much larger GPU packaging - neither situation is ideal.
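To put the bandwidth argument into numbers, here is a rough Python sketch of peak theoretical memory bandwidth. It assumes that the quoted memory clocks are effective data rates and that the cards in question use a 256-bit memory interface; the bus width is our assumption and the function name is purely illustrative.

```python
def memory_bandwidth_gb_s(effective_clock_mhz, bus_width_bits):
    """Peak theoretical bandwidth in GB/s (1 GB = 10**9 bytes)."""
    transfers_per_second = effective_clock_mhz * 1e6
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Assumption: a 256-bit memory interface on each of these cards.
BUS_WIDTH_BITS = 256

cards = [
    ("GeForce 7800 GTX", 1200),
    ("Radeon X1800XT", 1500),
    ("GeForce 7800 GTX 512", 1700),
    ("Radeon X1950XTX", 2000),
]

for name, clock_mhz in cards:
    bandwidth = memory_bandwidth_gb_s(clock_mhz, BUS_WIDTH_BITS)
    print(f"{name}: {bandwidth:.1f} GB/s")

# A 512-bit bus would reach the X1950XTX's figure at half the memory clock,
# but at the cost of the pin count problems described above.
print(memory_bandwidth_gb_s(1000, 512) == memory_bandwidth_gb_s(2000, 256))
```

Under these assumptions, 2000MHz memory on a 256-bit bus works out to 64GB/s, which shows why faster memory is the more practical route to extra bandwidth than a wider bus.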

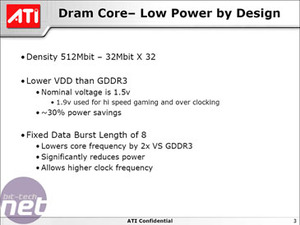

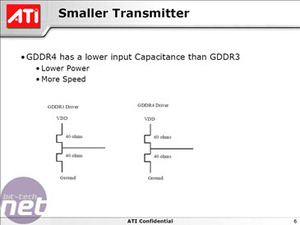

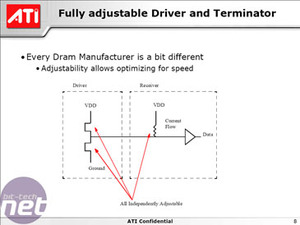

Rising power requirements are also becoming an increasingly large problem for both GPU manufacturers, so the ability to save power while chasing higher memory frequencies is a definite bonus. The nominal voltage for GDDR4 is 1.5V - 25% lower than the nominal voltage for GDDR3. ATI says that this will cut power consumption by around 30% at the same clock frequency. Remember, though, that the Radeon X1950XTX's memory frequency is set at 2000MHz when the card is running in 3D mode - almost 30% higher than the X1900XTX's 1550MHz memory clock. ATI also states that "1.9v is used for high speed gaming and overclocking" - from this we're assuming that ATI is using a 1.9V VDD on the Radeon X1950XTX in 3D mode.

When gaming, it's possible that the card will draw more power than a Radeon X1900XTX, but it will draw much less power when it is idle. Since you don't game 24 hours a day, it's definitely a good thing to see the Radeon X1950XTX reduce its idle power consumption to more manageable levels.
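As a back-of-the-envelope check on the gaming scenario, the sketch below uses the textbook approximation that dynamic power scales with voltage squared times frequency. This is our simplification, not ATI's method. The 2.0V GDDR3 figure is implied by 1.5V being 25% lower, the 1550MHz X1900XTX memory clock is an assumption, and the 1.9V 3D-mode voltage is our reading of ATI's statement.

```python
def relative_dynamic_power(voltage, clock_mhz, ref_voltage, ref_clock_mhz):
    """Power relative to a reference, assuming P is proportional to V^2 * f."""
    return (voltage / ref_voltage) ** 2 * (clock_mhz / ref_clock_mhz)


# Assumed reference point: X1900XTX GDDR3 at 2.0V and 1550MHz.
GDDR3_VOLTAGE = 2.0
GDDR3_CLOCK_MHZ = 1550

# GDDR4 at its nominal 1.5V and the same clock as the reference.
same_clock = relative_dynamic_power(1.5, GDDR3_CLOCK_MHZ,
                                    GDDR3_VOLTAGE, GDDR3_CLOCK_MHZ)

# X1950XTX in 3D mode: assumed 1.9V at 2000MHz.
gaming = relative_dynamic_power(1.9, 2000, GDDR3_VOLTAGE, GDDR3_CLOCK_MHZ)

print(f"Same clock, 1.5V: {same_clock:.2f}x the reference power")
print(f"3D mode, 1.9V at 2000MHz: {gaming:.2f}x the reference power")
```

The crude model does not reproduce ATI's 30% figure at nominal voltage, so it should not be read as a measurement. It does, however, put the 3D-mode memory at roughly 1.16 times the reference power, which is consistent with the card possibly drawing more than an X1900XTX when gaming.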

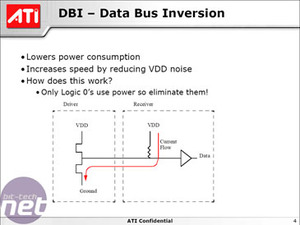

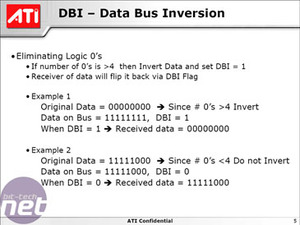

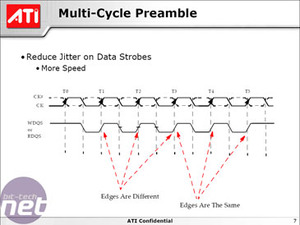

Data Bus Inversion (DBI) is another method employed to further reduce memory power consumption. The technique attempts to minimise the number of logic 0s in the data, as a logic 0 requires more power than a logic 1. To achieve this, GDDR4 inverts any 8-bit data set containing more than four logic 0s, turning its 0s into 1s and vice versa, and sets a DBI flag to 1 to tell the memory controller that the data is inverted. As a result, when the memory controller comes back to collect the inverted data, it knows to convert it back to its original form before going any further.
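The inversion rule is easy to sketch in Python. This is a minimal illustration rather than a model of the actual hardware: it assumes the threshold is a plain count of zero bits within each byte, and the function names `dbi_encode` and `dbi_decode` are hypothetical.

```python
def dbi_encode(byte):
    """Return (encoded_byte, dbi_flag) for one 8-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected an 8-bit value")
    zeros = 8 - bin(byte).count("1")
    if zeros > 4:
        # More than four 0s: flip every bit and raise the flag.
        return byte ^ 0xFF, 1
    return byte, 0


def dbi_decode(encoded_byte, dbi_flag):
    """Recover the original byte from its encoded form and flag."""
    return encoded_byte ^ 0xFF if dbi_flag else encoded_byte


original = 0b00000001  # seven 0s, so it gets inverted
encoded, flag = dbi_encode(original)
print(f"{original:08b} -> {encoded:08b}, DBI flag = {flag}")
print(f"decoded: {dbi_decode(encoded, flag):08b}")
```

With this rule, no encoded byte ever carries more than four logic 0s, at the cost of one extra flag bit per byte.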

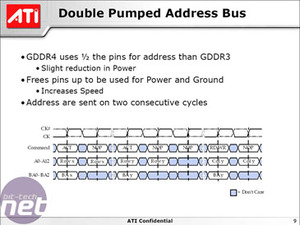

Along with this, GDDR4 has fewer address pins - half the number that GDDR3 has. This results in a slight reduction in power consumption at the expense of latency: the address latency is double that of GDDR3. The benefit is that the freed-up pins can now be used for power and grounding, resulting in higher attainable clock frequencies. Since bandwidth is generally more important than latency in graphics, the trade-offs are worth it, in our opinion.
